
Sam Thursfield: Status update, 15/06/2025

news.movim.eu / PlanetGnome • 15 June, 2025 • 7 minutes

This month I created a personal data map where I tried to list all my important digital identities.

(It’s actually now a spreadsheet, which I’ll show you later. I didn’t want to start the blog post with something as dry as a screenshot of a spreadsheet.)

Anyway, I made my personal data map for several reasons.

The first reason was to stay safe from cybercrime. In a world of increasing global unfairness and inequality, of course crime and scams are increasing too. Schools don’t teach how digital tech actually works, so it’s a great time to be a cyber criminal. Imagine being a house burglar in a town where nobody knows how doors work.

Lucky for me, I’m a professional door guy. So I don’t worry too much beyond having a really really good email password (it has numbers and letters). But it’s useful to double-check whether I have my credit card details on a site where the password is still “sam2003”.

The second reason is to help me migrate to services based in Europe. Democracy over here is what it is, there are good days and bad days, but unlike in the USA we at least have more options than a repressive death cult and a fundraising business. (Shout-out to @angusm@mastodon.social for that one.) You can’t completely own your digital identity and your data, but you can at least try to keep it close to home.

The third reason was to see who has the power to influence my online behaviour.

This was an insight from reading the book Technofeudalism. I’ve always been uneasy about websites tracking everything I do. Most of us are, to the point that we have made myths like “your phone microphone is always listening so Instagram can target adverts”. (As McSweeney’s Internet Tendency confirms, it’s not! It’s just tracking everything you type, every app you use, every website you visit, and everywhere you go in the physical world.)

I used to struggle to explain why all that tracking feels so bad. Technofeudalism frames a concept of cloud capital, arguing that it is now more powerful than other kinds of capital because cloud capitalists can do something Henry Ford, Walt Disney and The Monopoly Guy could only dream of: mine their data stockpile to produce precisely targeted recommendations, search bubbles and adverts which can influence your behaviour before you’ve even noticed.

This might sound paranoid when you first hear it, but consider how social media platforms reward you for expressing anger and outrage. Remember the first time you saw a post on Twitter from a stranger that you disagreed with? And your witty takedown attracted likes and praise? This stuff can be habit-forming.

In the 20th century, ad agencies changed people’s buying patterns and political views using billboards, TV channels and newspapers. But all that is like a primitive blunderbuss compared to recommendation algorithms, feedback loops and targeted ads on social media and video apps.

I lived through the days when a web search for “Who won the last election” would just return 10 pages that included the word “election”.

(If you’re nostalgic for those days… you’ll be happy to know that GNOME’s desktop search engine still works like that today!)

I can spot when apps are trying to ‘nudge’ me with dark patterns. But kids aren’t born with that skill, and they aren’t necessarily going to understand the nature of Tech Billionaire power unless we help them to see it. We need a framework to think critically about, and discuss, the power that Meta, Amazon and Microsoft have over everyone’s lives. Schools don’t teach how digital tech actually works, but maybe a “personal data map” can be a useful teaching tool?

By the way, here’s what my cobbled-together “Personal data map” looks like, taking into account security, what data is stored and who controls it. (With some fake data… I don’t want this blog post to be a “How to steal my identity” guide.)

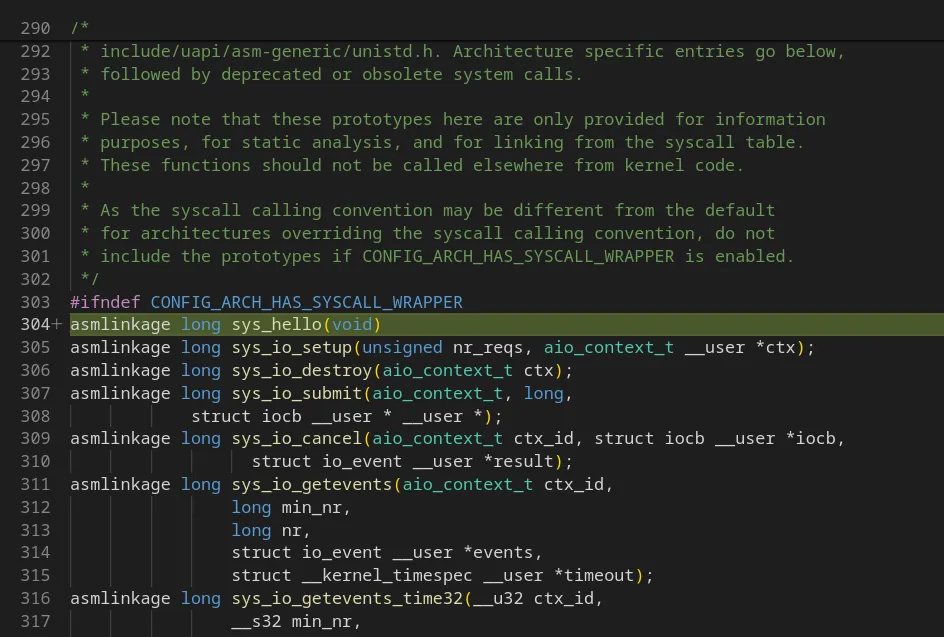

| Name | Risks | Sensitivity rating | Ethical rating | Location | Controller | First factor | Second factor | Credentials cached? | Data stored |
|---|---|---|---|---|---|---|---|---|---|
| Bank account | Financial loss | 10 | 2 | Europe | Bank | Fingerprint | None | On phone | Money, transactions |
|  | Identity theft | 5 | -10 | USA | Meta | Password |  | On phone | Posts, likes, replies, friends, views, time spent, locations, searches |
| Google Mail (sam@gmail.com) | Reset passwords | 9 | -5 | USA | Google | Password | None | Yes – cookies | Conversations, secrets |
| GitHub | Impersonation | 3 | 3 | USA | Microsoft | Password | OTP | Yes – cookies | Credit card, projects, searches |

How is it going migrating off USA-based cloud services?

“The internet was always a project of US power”, says Paris Marx in a keynote at the PublicSpaces conference, which I’d never heard of before.

Closing my Amazon account took an unnecessary number of steps, and it was sad to say goodbye to the list of 12 different addresses I have called home at various times since 2006, but I don’t miss it; I’ve been avoiding Amazon for years anyway. When I need English-language books, I get them from an Irish online bookstore named Kenny’s. (Ireland, cleverly, did not leave the EU, so they can still ship books to Spain without incurring import taxes.)

Dropbox took a while because I had years of important stuff in there. I actually don’t think they’re too bad of a company, and it was certainly quick to delete my account. (And my data… right? You guys did delete all my data?).

I was using Dropbox to sync notes with the Joplin notes app, and switched to the paid Joplin Cloud option, which seems a nice way to support a useful open source project.

I still needed a way to store sensitive data, and realized I have access to Proton Drive. I can’t recommend it as a service because the parent company Proton AG don’t seem so serious about Linux support, but I got it to work thanks to some heroes who added a protondrive backend to rclone.

Instead of using Google cloud services to share photos, and to avoid anything so primitive as an actual cable, I learned that KDE Connect can transfer files from my Android phone to my laptop really neatly. KDE Connect is really good. On the desktop I use GSConnect, which integrates with GNOME Shell really well. I don’t think I’ve been so impressed by a volunteer-driven open source project in years. Thanks to everyone who worked on these great apps!

I also migrated my VPS from a US-based host, Tornado VPS, to one in Europe. Tornado VPS (formerly prgmr.com) are a great company, but storing data in the USA doesn’t seem like the way forward.

That’s about it so far. Feels a bit better.

What’s next?

I’m not sure what’s next!

I can’t leave GitHub and GitLab.com, but my days of “Write some interesting new code and push it straight to GitHub” are long gone. I didn’t sign up to train somebody else’s LLM for free, and neither should you. (I’m still interested in sharing interesting code with nice people, of course, but let’s not make it so easy for Corporate America to take our stuff without credit or compensation. Bring back the “sneakernet”!)

Leaving Meta platforms and dropping YouTube doesn’t feel directly useful. It’s like individually renouncing debit cards, or air travel: a lot of inconvenience for you, but the business owners don’t even notice. The important thing is to use the alternatives more. That’s why I still write a blog in 2025 and mostly read RSS feeds and the Fediverse. Gigs where I live are mostly only promoted on Instagram, but I’m sure that’s temporary.

In the first quarter of 2025, rich people put more money into AI startups than into everything else put together (see: Pivot to AI). Investors love a good bubble, but there’s also an element of power here.

If programmers only know how to write code using Copilot, then Microsoft have the power to decide what code we can and can’t write. (Currently this seems limited to not using the word ‘gender’. But I can imagine a future where it catches you reverse-engineering proprietary software, or jailbreaking locked-down devices, or trying to write a new BitTorrent client.)

If everyone gets their facts from ChatGPT, then OpenAI have the power to tweak everyone’s facts, an ability that is currently limited only to presidents of major world superpowers. If we let ourselves avoid critical thinking and rely on ChatGPT to generate answers to hard questions instead, which teachers say is exactly what’s happening in schools now… then what?