Release Highlights:

If you are upgrading from a previous version, please check the changes in SQL schemas; there are no changes in the configuration, API commands or hooks.


Below is a detailed breakdown of the improvements and enhancements:

Matrix Gateway with Room Support

ejabberd can bridge communications to

Matrix

servers since version 24.02 thanks to

mod_matrix_gw

, but until now only one-to-one conversations were supported.

Starting with ejabberd 25.03, you can receive invitations to Matrix rooms and join public Matrix rooms yourself. The Matrix bridge appears as a multi-user chat service, by default at matrix.yourdomain.net.

For example, once you have enabled the Matrix bridge, if you wish to join the room

#ejabberd-matrix-bridge:matrix.org

, you can use XMPP MUC protocol to enter the XMPP room:

#ejabberd-matrix-bridge%matrix.org@matrix.yourdomain.net
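The address mapping in the example above can be sketched in a few lines of Python. This is only an illustration of the naming pattern shown here (replace the first `:` with `%` and append the bridge host); the function name is hypothetical, and the default host is the placeholder from this post, not a real service.

```python
def matrix_room_to_muc_jid(room_alias: str,
                           bridge_host: str = "matrix.yourdomain.net") -> str:
    """Map a Matrix alias '#room:server' to '#room%server@bridge_host'."""
    if not room_alias.startswith("#") or ":" not in room_alias:
        raise ValueError(f"not a Matrix room alias: {room_alias!r}")
    # Split on the first ':' only, which separates the room from its server.
    localpart, _, server = room_alias.partition(":")
    return f"{localpart}%{server}@{bridge_host}"


print(matrix_room_to_muc_jid("#ejabberd-matrix-bridge:matrix.org"))
```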

Caveats for this release:

-

Older room protocol versions are not supported yet in this release. For now we only support room protocol versions 9, 10 and 11, but we are planning to add support for older rooms.

-

One-to-one conversations have to be started again from scratch after a server restart, as persistence is not yet implemented.

-

Matrix room members are those who have subscribed to the room, not necessarily those who are online, and mod_matrix_gw sends a presence for each of them, so the result depends on whether the XMPP client can handle thousands of MUC members.

Note that the matrix.org server also declares an XMPP service in its DNS entries. To communicate with the real Matrix server, you need to block that XMPP service by adding this rule to the firewall on your ejabberd instance:

iptables -A OUTPUT -d lethe.matrix.org -j REJECT

As a reminder, because encrypted payloads differ between Matrix and XMPP, Matrix payloads cannot be end-to-end encrypted. In the future, it could be possible to join encrypted Matrix rooms, with the decryption happening on the server in the bridge, but the conversation would no longer be end-to-end encrypted. It would just be a convenience for those who trust their XMPP server. Please let us know if this is an option you would like to see in the future.

Support Multiple Simultaneous Password Types

Faithful to our commitment to help gradually ramp up messaging security, we added the ability to store passwords in multiple formats per account. This feature should help with migration to newer, more secure authentication methods. Using the option auth_stored_password_types, you can specify in which formats the password is stored in the database. The stored passwords are updated each time a user changes the password or when the user's client provides the password in a new format using the SASL Upgrade Tasks XEP specification.

This option takes a list of values; the currently recognized ones are plain, scram_sha1, scram_sha256 and scram_sha512. When this option is set, it overrides the old options that specified password storage: auth_scram_hash and auth_password_format.
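To see why each SCRAM format needs its own stored record, here is a minimal Python sketch of the key derivation defined in RFC 5802, run once per hash function. It is not ejabberd's implementation: the function and dictionary names are hypothetical, the iteration count is an arbitrary example value, and password normalization (SASLprep) is omitted.

```python
import hashlib
import hmac
import os

# Map the option values named above to hashlib algorithm names.
HASHES = {"scram_sha1": "sha1", "scram_sha256": "sha256", "scram_sha512": "sha512"}


def scram_record(password: str, kind: str, salt: bytes, iterations: int = 4096) -> dict:
    """Derive the values a server keeps for one SCRAM variant (RFC 5802)."""
    algo = HASHES[kind]
    # SaltedPassword = Hi(password, salt, i), which is PBKDF2 with HMAC.
    salted = hashlib.pbkdf2_hmac(algo, password.encode("utf-8"), salt, iterations)
    client_key = hmac.new(salted, b"Client Key", algo).digest()
    stored_key = hashlib.new(algo, client_key).digest()
    server_key = hmac.new(salted, b"Server Key", algo).digest()
    return {"type": kind, "salt": salt, "iterations": iterations,
            "stored_key": stored_key, "server_key": server_key}


# One record per configured format; none can be computed from another.
records = [scram_record("secret", kind, os.urandom(16)) for kind in HASHES]
for record in records:
    print(record["type"], len(record["stored_key"]))
```

Because the derived keys are one-way, a record in a new format can only be produced when the server sees the plain password again, which matches the update moments described above.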

Update SQL Schema

This release requires an SQL database schema update to allow storage of multiple passwords per user. ejabberd can perform this task automatically if your configuration enables the update_sql_schema toplevel option.

If you prefer to perform the SQL schema update manually, follow the instructions that correspond to your database and to whether your configuration enables new_sql_schema:

-

MySQL default schema:

ALTER TABLE users ADD COLUMN type smallint NOT NULL DEFAULT 0;

ALTER TABLE users ALTER COLUMN type DROP DEFAULT;

ALTER TABLE users DROP PRIMARY KEY, ADD PRIMARY KEY (username(191), type);

-

MySQL new schema:

ALTER TABLE users ADD COLUMN type smallint NOT NULL DEFAULT 0;

ALTER TABLE users ALTER COLUMN type DROP DEFAULT;

ALTER TABLE users DROP PRIMARY KEY, ADD PRIMARY KEY (server_host(191), username(191), type);

-

PostgreSQL default schema:

ALTER TABLE users ADD COLUMN "type" smallint NOT NULL DEFAULT 0;

ALTER TABLE users ALTER COLUMN type DROP DEFAULT;

ALTER TABLE users DROP CONSTRAINT users_pkey, ADD PRIMARY KEY (username, type);

-

PostgreSQL new schema:

ALTER TABLE users ADD COLUMN "type" smallint NOT NULL DEFAULT 0;

ALTER TABLE users ALTER COLUMN type DROP DEFAULT;

ALTER TABLE users DROP CONSTRAINT users_pkey, ADD PRIMARY KEY (server_host, username, type);

-

SQLite default schema:

ALTER TABLE users ADD COLUMN type smallint NOT NULL DEFAULT 0;

CREATE TABLE new_users (

username text NOT NULL,

type smallint NOT NULL,

password text NOT NULL,

serverkey text NOT NULL DEFAULT '',

salt text NOT NULL DEFAULT '',

iterationcount integer NOT NULL DEFAULT 0,

created_at timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (username, type)

);

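INSERT INTO new_users (username, type, password, serverkey, salt, iterationcount, created_at) SELECT username, type, password, serverkey, salt, iterationcount, created_at FROM users;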

DROP TABLE users;

ALTER TABLE new_users RENAME TO users;

-

SQLite new schema:

ALTER TABLE users ADD COLUMN type smallint NOT NULL DEFAULT 0;

CREATE TABLE new_users (

username text NOT NULL,

server_host text NOT NULL,

type smallint NOT NULL,

password text NOT NULL,

serverkey text NOT NULL DEFAULT '',

salt text NOT NULL DEFAULT '',

iterationcount integer NOT NULL DEFAULT 0,

created_at timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

PRIMARY KEY (server_host, username, type)

);

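INSERT INTO new_users (username, server_host, type, password, serverkey, salt, iterationcount, created_at) SELECT username, server_host, type, password, serverkey, salt, iterationcount, created_at FROM users;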

DROP TABLE users;

ALTER TABLE new_users RENAME TO users;
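Before touching a real database, the table-rebuild steps that end with PRIMARY KEY (username, type) can be rehearsed on an in-memory SQLite database. The sketch below assumes a pre-upgrade users table with the column layout in OLD_SCHEMA (that layout is an assumption; check your own database), and it names the columns explicitly in the INSERT so the copy does not depend on column order.

```python
import sqlite3

# Assumed pre-upgrade table layout; verify against your own database.
OLD_SCHEMA = """
CREATE TABLE users (
    username text PRIMARY KEY,
    password text NOT NULL,
    serverkey text NOT NULL DEFAULT '',
    salt text NOT NULL DEFAULT '',
    iterationcount integer NOT NULL DEFAULT 0,
    created_at timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP
);
"""

MIGRATION = """
ALTER TABLE users ADD COLUMN type smallint NOT NULL DEFAULT 0;
CREATE TABLE new_users (
    username text NOT NULL,
    type smallint NOT NULL,
    password text NOT NULL,
    serverkey text NOT NULL DEFAULT '',
    salt text NOT NULL DEFAULT '',
    iterationcount integer NOT NULL DEFAULT 0,
    created_at timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,
    PRIMARY KEY (username, type)
);
INSERT INTO new_users (username, type, password, serverkey, salt, iterationcount, created_at)
    SELECT username, type, password, serverkey, salt, iterationcount, created_at FROM users;
DROP TABLE users;
ALTER TABLE new_users RENAME TO users;
"""

conn = sqlite3.connect(":memory:")
conn.executescript(OLD_SCHEMA)
conn.execute("INSERT INTO users (username, password) VALUES ('alice', 'secret')")
conn.executescript(MIGRATION)

# PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
info = conn.execute("PRAGMA table_info(users)").fetchall()
pk_columns = [r[1] for r in sorted(info, key=lambda r: r[5]) if r[5] > 0]
row = conn.execute("SELECT username, type, password FROM users").fetchone()
print(pk_columns, row)
```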

New mod_adhoc_api module

You may remember this paragraph from the

ejabberd 24.06 release notes

:

ejabberd already has around 200 commands to perform many administrative tasks, both to get information about the server and its status, and also to perform operations with side-effects. Those commands have their input and output parameters clearly described, and also documented.

Almost a year ago, ejabberd WebAdmin got support to execute all those 200

API commands

... and now your XMPP client can execute them too!

The new mod_adhoc_api ejabberd module lets you execute all the ejabberd API commands from an XMPP client that supports XEP-0050 Ad-Hoc Commands and XEP-0030 Service Discovery.

Simply add this module to modules and set up api_permissions to grant an account permission to execute some commands, some tags of commands, or all commands.

Reload the ejabberd configuration and log in to that account with your client.

Example configuration:

acl:
  admin:
    user: jan@localhost

api_permissions:
  "adhoc commands":
    from: mod_adhoc_api
    who: admin
    what:
      - "[tag:roster]"
      - "[tag:session]"
      - stats
      - status

modules:
  mod_adhoc_api:
    default_version: 2

Now you can execute the same commands in the command line, using ReST, in the WebAdmin, and in your XMPP client!

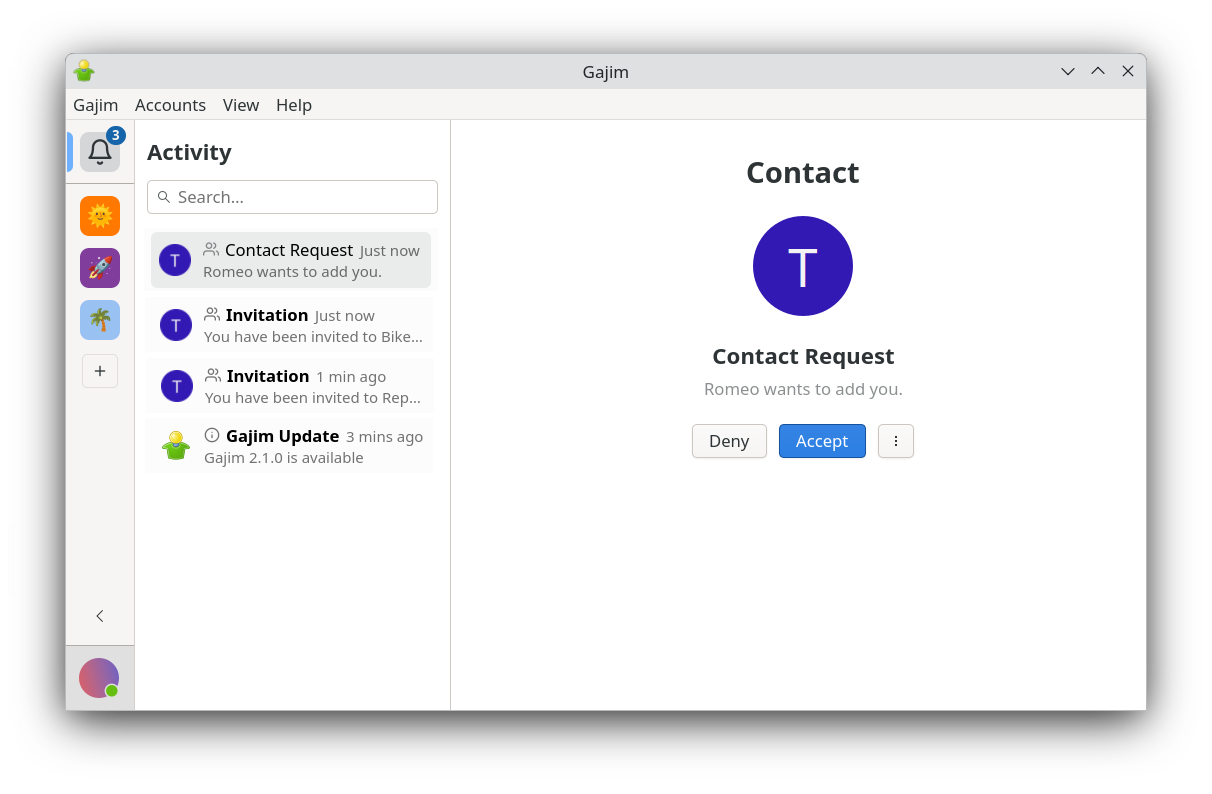

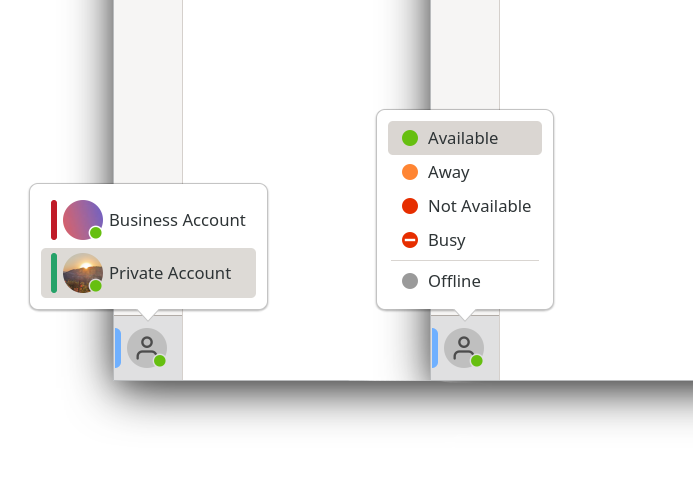

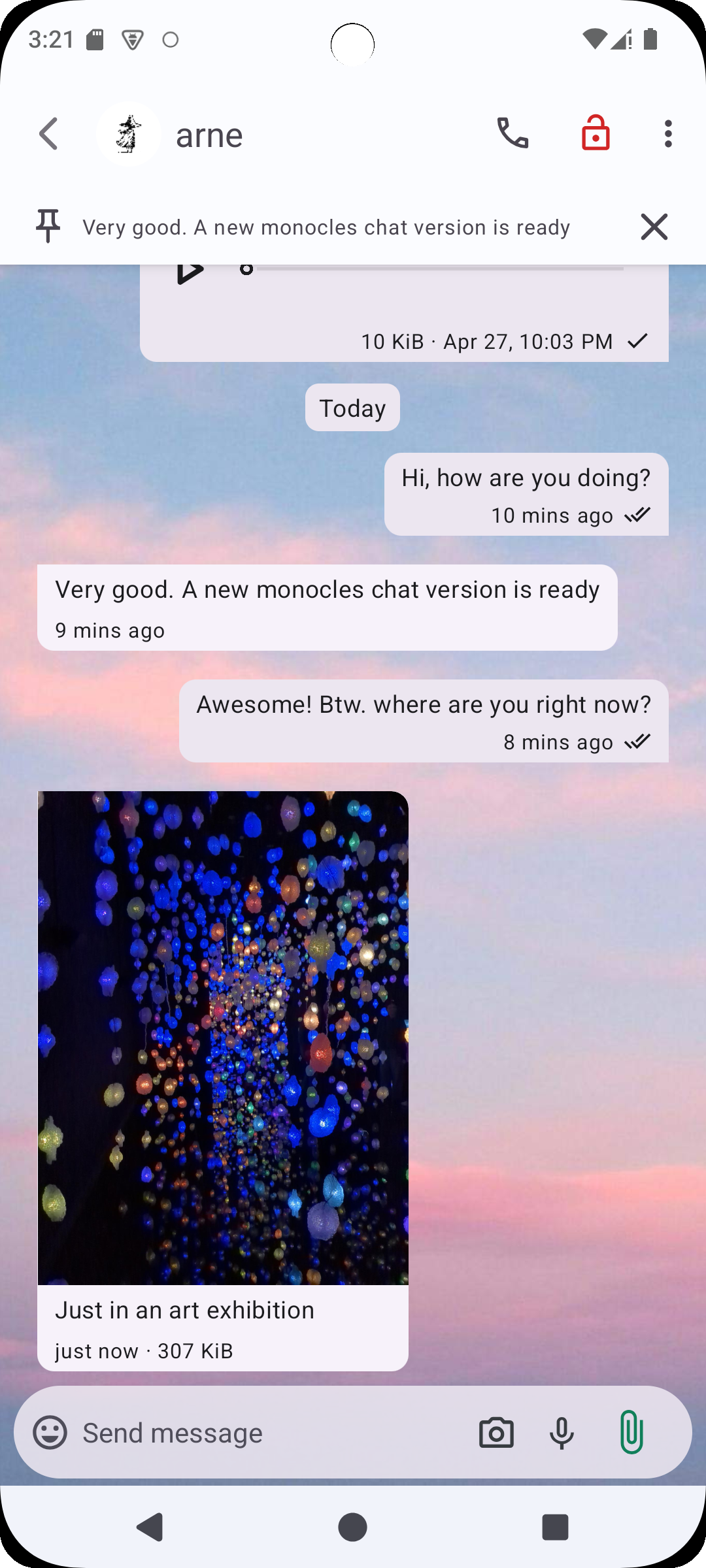

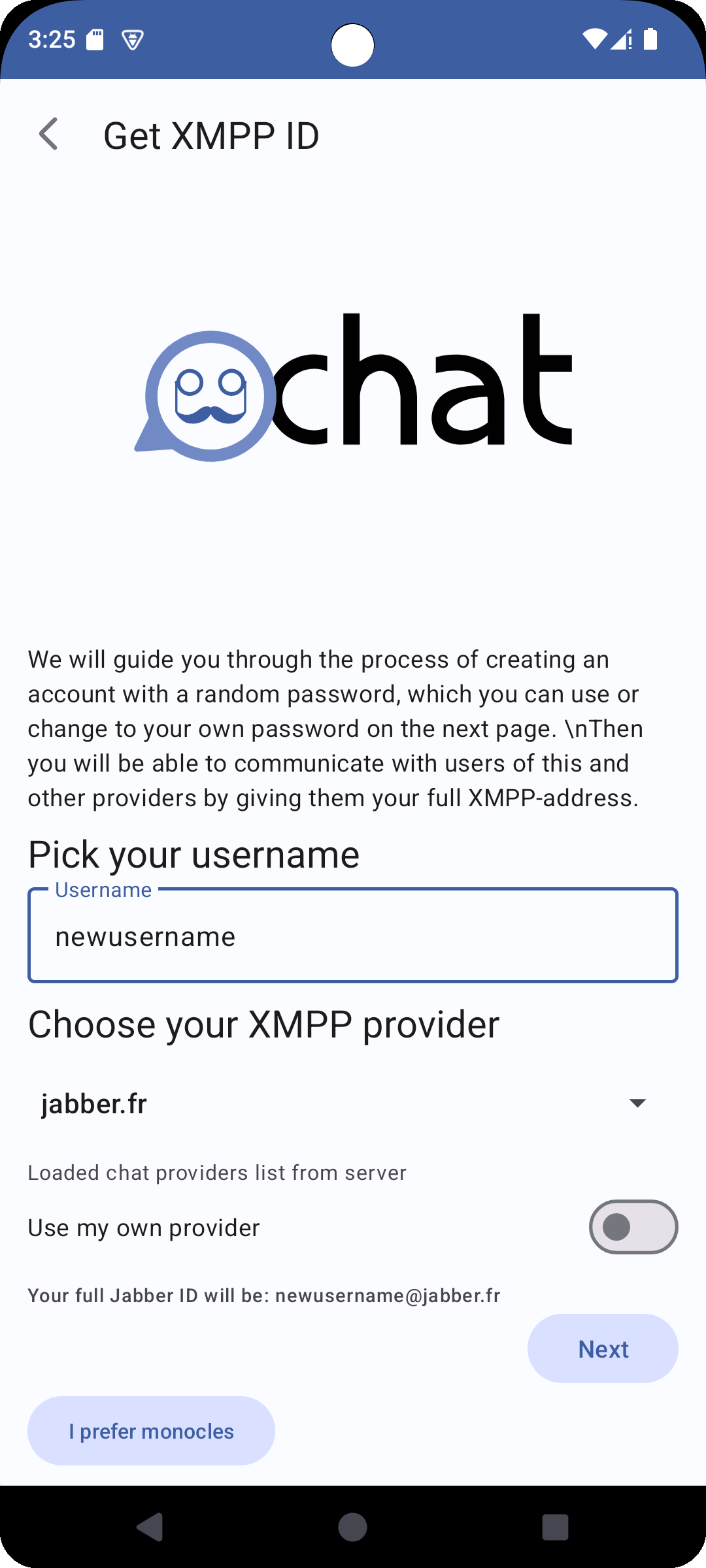

This feature has been tested with Gajim, Psi, Psi+ and Tkabber. Converse.js can list and execute the commands, but doesn't show the result to the user.

Macros and Keyword improvements

Some options in ejabberd supported the use of hard-coded keywords. For example, many modules like mod_vcard could use HOST in their hosts option. Another example is the captcha_cmd toplevel option: it could use the VERSION and SEMVER keywords. All this was implemented separately for each individual option.

Now those keywords are predefined and can be used by any option. This is implemented in ejabberd core, so there is no need to implement the keyword substitution in each option. The predefined keywords are: HOST, HOME, VERSION and SEMVER.

For example, this configuration is now possible without requiring any specific implementation in the option source code:

ext_api_url: "http://example.org/@VERSION@/api"

Additionally, now you can define your own keywords, similarly to how macros are defined:

define_keyword:
  SCRIPT: "captcha.sh"

captcha_cmd: "tools/@SCRIPT@"

And finally, now macros can be used inside string options, similarly to how keywords can be used:

define_macro:
  SCRIPT: "captcha.sh"

captcha_cmd: "tools/@SCRIPT@"
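The `@NAME@` substitution used in the examples above can be pictured with a short Python sketch. This is not ejabberd's code: the function name is hypothetical, the version string is an example value, and leaving unknown names untouched is an assumption made for the illustration.

```python
import re


def expand_keywords(value: str, keywords: dict[str, str]) -> str:
    """Replace each @NAME@ with keywords[NAME]; unknown names are left as-is."""
    def repl(match: re.Match) -> str:
        return keywords.get(match.group(1), match.group(0))
    return re.sub(r"@([A-Z_]+)@", repl, value)


keywords = {"VERSION": "25.03", "SCRIPT": "captcha.sh"}
print(expand_keywords("http://example.org/@VERSION@/api", keywords))
print(expand_keywords("tools/@SCRIPT@", keywords))
```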

In summary, macros and keywords can now be defined and used very similarly, so you may be wondering how they differ. That is explained in detail in the new section Macros and Keywords:

-

Macros are implemented by the

yconf

library: macros cannot be defined inside

host_config

.

-

Keywords are implemented by ejabberd itself: keywords can be defined inside host_config, but only for use in module options. Keywords cannot be used in these toplevel options: hosts, loglevel, version.

ejabberdctl: New option CTL_OVER_HTTP

The ejabberdctl script is useful not only to start and stop ejabberd; it can also execute the ~200 ejabberd API commands inside the running ejabberd node. For this, the script starts another Erlang virtual machine and connects it to the existing one that is running ejabberd.

This connection method is acceptable for performing a few administrative tasks (reload configuration, register an account, etc). However, ejabberdctl is noticeably slow for performing multiple calls, for example to register 1000 accounts. In that case, it is preferable to use another API frontend like mod_http_api or ejabberd_xmlrpc.

And now ejabberdctl can do exactly this! ejabberdctl can be configured to use an HTTP connection to execute the command, which is around 20 times faster than starting an Erlang node.

To enable this feature, first configure in

ejabberd.yml

:

listen:
  -
    port: "unix:sockets/ctl_over_http.sock"
    module: ejabberd_http
    unix_socket:
      mode: '0600'
    request_handlers:
      /ctl: ejabberd_ctl

Then enable the

CTL_OVER_HTTP

option in

ejabberdctl.cfg

:

CTL_OVER_HTTP=sockets/ctl_over_http.sock

Let's register 100 accounts using the standard method and later using CTL_OVER_HTTP:

$ time for (( i=100 ; i ; i=i-1 )) ; do ejabberdctl register user-standard-$i localhost pass; done

...

real 0m43,929s

user 0m41,878s

sys 0m10,558s

$ time for (( i=100 ; i ; i=i-1 )) ; do CTL_OVER_HTTP=sockets/ctl_over_http.sock ejabberdctl register user-http-$i localhost pass; done

...

real 0m2,144s

user 0m1,377s

sys 0m0,566s
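The "around 20 times faster" figure can be checked from the two `real` timings above. A small worked calculation in Python (the comma in the timings is a locale decimal separator, written here as a point):

```python
calls = 100
standard_seconds = 43.929    # "real" time of the standard ejabberdctl loop
over_http_seconds = 2.144    # "real" time of the CTL_OVER_HTTP loop

speedup = standard_seconds / over_http_seconds
per_call_standard_ms = standard_seconds / calls * 1000
per_call_http_ms = over_http_seconds / calls * 1000

print(f"speedup: {speedup:.1f}x")
print(f"per call: {per_call_standard_ms:.0f} ms vs {per_call_http_ms:.0f} ms")
```

The per-call figures show where the time goes: most of each standard call is spent starting and connecting a new Erlang node.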

This feature is enabled by default in the

ejabberd

container image.

mod_configure: New option access

mod_configure

always had support to configure what accounts can access its features: using the

configure

access rule

. The name of that access rule was hard-coded. Now, thanks to the new

access

option, that can be configured.

Container images: Reduce friction, use macros, WebAdmin port

Several improvements are added in the

ejabberd

and

ecs

container images

to allow easier migration from one to the other. This also makes it possible to use the same documentation file for both container images, as there are now very few usability differences between them. Also, a new

comparison table

in that documentation describes all the differences between both images. The improvements are:

-

Adds support for paths from

ecs

into

ejabberd

container image, and vice versa:

/opt/

linked to

/home/

and

/usr/local/bin/

linked to

/opt/ejabberd/bin/

-

Include the

ejabberdapi

binary also in the

ejabberd

container image, as does

ecs

-

Copy captcha scripts to immutable path

/usr/local/bin/

for easy calling, as that path is included in

$PATH

-

Copy sql files to

/opt/ejabberd/database/sql/

-

Copy sql also to

/opt/ejabberd/database/

for backwards compatibility with

ecs

-

Link path to Mnesia spool dir for backwards compatibility

-

CONTAINER.md

now documents both images, as there are few differences. Also includes a comparison table

Macros are used in the default

ejabberd.yml

configuration files to define host, admin account and port numbers. This way you can overwrite any of them at start time using

environment variables

:

env:
- name: PORT_HTTP_TLS
  value: 5444

If you use the

podman-desktop

or

docker-desktop

applications, you may have noticed they show a button named "Open Browser". When you click that button, it opens a web browser with

/

URL and the lowest exposed port number. Now the default

ejabberd.yml

configuration file listens on port number 1880, the lowest of all, so the "Open Browser" button directly opens the ejabberd WebAdmin page.

ejabberd container image: admin account

In the

ejabberd

container image, you can grant admin rights to an account using the

EJABBERD_MACRO_ADMIN

environment variable. Additionally, if you set the

REGISTER_ADMIN_PASSWORD

environment variable, that account is automatically registered.

Example kubernetes yaml file in podman:

env:
- name: EJABBERD_MACRO_ADMIN
  value: administrator@example.org
- name: REGISTER_ADMIN_PASSWORD
  value: somePass0rd

When those environment variables are not set, admin rights are granted to a random account name in the default

ejabberd.yml

.

Alternatively, this can be done with the existing

CTL_ON_CREATE

variable, and then you would need to modify

ejabberd.yml

accordingly:

env:
- name: CTL_ON_CREATE
  value: register administrator example.org somePass0rd

Unix Domain Socket: Relative path

There are several minor improvements in the Unix Domain Socket support, the most notable being support for relative socket paths: if the port option is set to "unix:directory/filename" without an absolute path, the directory and file are created in the Mnesia spool directory.
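The resolution rule described above can be sketched as follows. This is an illustration only: the function name and the spool directory used in the example are hypothetical, and the real spool location depends on your installation.

```python
from pathlib import PurePosixPath


def resolve_unix_socket(port_option: str, spool_dir: str) -> str:
    """Return the socket path for a 'unix:...' port option."""
    prefix = "unix:"
    if not port_option.startswith(prefix):
        raise ValueError("not a unix domain socket port option")
    path = PurePosixPath(port_option[len(prefix):])
    if path.is_absolute():
        return str(path)                        # absolute paths are used as given
    return str(PurePosixPath(spool_dir) / path)  # relative paths go under the spool dir


# "/var/lib/ejabberd" is a made-up spool directory for the example.
print(resolve_unix_socket("unix:sockets/ctl_over_http.sock", "/var/lib/ejabberd"))
```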

Privileged Entity Bugfixes

Two bugs related to XEP-0356: Privileged Entity have been solved:

Don't rewrite "self-addressed" privileged IQs as results

process_privilege_iq

is meant to rewrite the result of a privileged IQ into the forwarded form required by XEP-0356 so it can be routed back to the original privileged requester. It checks whether the impersonated JID (

ReplacedJid

) of the original request matches the recipient of the IQ being processed to determine if this is a response to a privileged IQ (assuming it has privileged-IQ metadata attached).

Unfortunately, it doesn't check the packet type, so this check also matches a privileged-IQ request that is being sent to the same user that's being impersonated. This results in the request itself being rewritten and forwarded back to the sending component, instead of being processed and having the result sent back.

Instead, just check for IQ results (either a regular result or an error), and as long as it is marked as being a response to a privileged IQ, always rewrite it and forward it to the sending component. There's no circumstance under which we shouldn't forward a privileged-IQ response, so we don't need to be tricky about checking whether impersonated user and recipient match.
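The corrected decision can be summarised in a few lines of Python. This is a simplified model of the rule described above, not the Erlang code in ejabberd; the `Iq` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Iq:
    type: str                  # "get", "set", "result" or "error"
    privileged_response: bool  # carries privileged-IQ metadata


def should_forward_to_component(iq: Iq) -> bool:
    """Rewrite and forward only responses (result or error) to privileged IQs."""
    return iq.type in ("result", "error") and iq.privileged_response


# A self-addressed privileged request is no longer mistaken for its own result.
print(should_forward_to_component(Iq(type="get", privileged_response=True)))
print(should_forward_to_component(Iq(type="result", privileged_response=True)))
```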

Accept non-privileged IQs from privileged components

mod_privilege currently drops any non-privileged IQ received from a component with an error about it not being properly wrapped. While this might represent a mistake on the part of the component, it means that well-behaved components can no longer send non-privileged IQs (something they normally can do if mod_privilege isn't enabled).

Since mod_privilege is intended to grant additional permissions, and not remove existing ones, route non-privileged IQs received from the component normally.

This also removes the special case for roster-query IQ stanzas, since those are also non-privileged and will be routed along with any other non-privileged IQ packet. This mirrors the privileged-IQ/everything-else structure of the XEP, which defines the handling of privileged IQ stanzas and leaves all other IQ stanzas as defined in their own specs.

To make this clearer, the predicate function now returns distinct results indicating privileged IQs, non-privileged IQs, and error conditions, rather than treating non-privileged IQs as an error that gets handled by routing the packet normally.

mod_muc_occupantid: Enable in the default configuration

mod_muc_occupantid

was added to the list of modules enabled in the sample configuration file

ejabberd.yml.example

.

It's not necessarily obvious that it's required for using certain modern features in group chat, and there's no downside in activating this module.

mod_http_api returns sorted list elements

When

mod_http_api

returns a list of elements, now those elements are sorted alphabetically. If it is a list of tuples, the tuples are sorted alphabetically by the first element in that tuple.

Notice that the new module mod_adhoc_api internally uses mod_http_api to format the API command arguments and results, which means that mod_adhoc_api benefits from this feature too.
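The ordering rule described in this section can be mimicked in Python as shown below. It only models the described behaviour (plain elements sorted, tuples sorted by their first element); the function name is hypothetical, and Python's default string order (by code point) stands in for "alphabetically".

```python
def sort_result(elements: list) -> list:
    """Sort a command result: tuples by first element, other values directly."""
    if elements and all(isinstance(e, tuple) for e in elements):
        return sorted(elements, key=lambda t: t[0])
    return sorted(elements)


print(sort_result(["user2", "user3", "user1"]))
print(sort_result([("member", "user2@localhost"), ("owner", "user1@localhost")]))
```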

create_room_with_opts API command separators

One of the arguments accepted by the

create_room_with_opts

API command is a list of room options, expressed as tuples of option name and option value. Some room option values are themselves lists of tuples! This is the case of

affiliations

and

subscribers

.

That is not a problem for API frontends that accept structured arguments like

mod_http_api

and

ejabberd_xmlrpc

. But this is a problem in

ejabberdctl

,

mod_adhoc_api

and WebAdmin, because they don't use structured arguments, and instead separate list elements with , and tuple elements with :. In that case, a list of tuples of lists of tuples cannot be parsed correctly if all of them use the same separators.

Solution: when using the

create_room_with_opts

command to set

affiliations

and

subscribers

options:

-

list elements were separated with

,

and now should be with

;

-

tuple elements were separated with

:

and now should be with

=

All the previous separators are still supported for backwards compatibility, but please use the new recommended separators, especially if using

ejabberdctl

,

mod_adhoc_api

and WebAdmin.

Let's see the old and the new recommended syntax side by side:

affiliations:owner:user1@localhost,member:user2@localhost

affiliations:owner=user1@localhost;member=user2@localhost
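To see why distinct separators matter, here is a naive Python parser for a flat options string of the kind ejabberdctl receives. It is a toy model, not ejabberd's parser: the function name is hypothetical and it only knows the two nested options named in this section.

```python
def parse_room_options(text: str) -> dict:
    """Split options on ',' and ':'; nested values use ';' and '='."""
    options = {}
    for item in text.split(","):
        name, _, value = item.partition(":")
        if name in ("affiliations", "subscribers"):
            options[name] = [tuple(pair.split("=")) for pair in value.split(";")]
        else:
            options[name] = value
    return options


new = "persistent:true,affiliations:owner=user1@localhost;member=user2@localhost"
old = "persistent:true,affiliations:owner:user1@localhost,member:user2@localhost"
print(parse_room_options(new))
print(parse_room_options(old))  # "member" is wrongly read as a room option
```

With the old separators, the outer split on `,` cuts the affiliations list in two, so the second affiliation is indistinguishable from a separate room option.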

In a practical example, instead of this (which didn't work at all):

ejabberdctl \
    create_room_with_opts \
    room_old_separators \
    conference.localhost \
    localhost \
    "persistent:true,affiliations:owner:user1@localhost,member:user2@localhost"

please use:

ejabberdctl \
    create_room_with_opts \
    room_new_separators \
    conference.localhost \
    localhost \
    "persistent:true,affiliations:owner=user1@localhost;member=user2@localhost"

Notice that both the old and new separators are supported by

create_room_with_opts

. For example, let's use

curl

to query

mod_http_api

:

curl -k -X POST -H "Content-type: application/json" \
     "http://localhost:5280/api/create_room_with_opts" \
     -d '{"name": "room_old_separators",
          "service": "conference.localhost",
          "host": "localhost",
          "options": [
           {"name": "persistent",
            "value": "true"},
           {"name": "affiliations",
            "value": "owner:user1@localhost,member:user2@localhost"}
          ]
         }'

curl -k -X POST -H "Content-type: application/json" \
     "http://localhost:5280/api/create_room_with_opts" \
     -d '{"name": "room_new_separators",
          "service": "conference.localhost",
          "host": "localhost",
          "options": [
           {"name": "persistent",
            "value": "true"},
           {"name": "affiliations",
            "value": "owner=user1@localhost;member=user2@localhost"}
          ]
         }'
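For scripts that prefer Python over curl, the same request can be prepared with the standard library. This sketch only builds the request using the URL and JSON body from the curl example above; actually sending it assumes a running ejabberd with mod_http_api listening on that port and suitable api_permissions, so that step is left commented out.

```python
import json
import urllib.request

payload = {
    "name": "room_new_separators",
    "service": "conference.localhost",
    "host": "localhost",
    "options": [
        {"name": "persistent", "value": "true"},
        {"name": "affiliations",
         "value": "owner=user1@localhost;member=user2@localhost"},
    ],
}

request = urllib.request.Request(
    "http://localhost:5280/api/create_room_with_opts",
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)

# To send it against a running server:
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
print(request.get_method(), request.full_url)
```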

New API commands to change Mnesia table storage

There are two new API commands:

mnesia_list_tables

and

mnesia_table_change_storage

.

In fact, those commands have been implemented since ejabberd 24.06, but they were tagged as

internal

as they were only used by WebAdmin. Now they are available for any API frontend, including

mod_adhoc_api

.

Erlang/OTP and Elixir versions support

Let's review the supported

Erlang/OTP

versions:

-

Erlang/OTP

20.0 up to 24.3

are discouraged: ejabberd 25.03 is the last ejabberd release that fully supports those old Erlang versions. If you are still using any of them, please upgrade before the next ejabberd release.

-

Erlang/OTP

25.0 up to 27.3

are the recommended versions. For example

Erlang/OTP 27.3

is used in the ejabberd binary installers and

ejabberd

container image.

-

Erlang/OTP

28.0-rc2

is mostly supported, but not yet recommended for production deployments.
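The Erlang/OTP ranges listed above can be turned into a small check, for example in a deployment script. This is a sketch: the function names and the "unlisted" label are hypothetical, only purely numeric versions are handled (so release candidates such as 28.0-rc2 are out of scope), and patch components are ignored.

```python
def parse_version(text: str) -> tuple:
    """Turn '27.3' or '24.3.4' into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


def erlang_support(version: str) -> str:
    """Classify an Erlang/OTP version against the ranges in this release."""
    major_minor = parse_version(version)[:2]
    if (20, 0) <= major_minor <= (24, 3):
        return "discouraged"
    if (25, 0) <= major_minor <= (27, 3):
        return "recommended"
    return "unlisted"


for candidate in ("24.3.4", "27.3", "19.3"):
    print(candidate, erlang_support(candidate))
```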

Regarding

Elixir

supported versions:

-

Elixir

1.10.3 up to 1.12.3

are discouraged: ejabberd compilation is not tested with those old Elixir versions.

-

Elixir

1.13.4 up to 1.18.3

are the recommended versions; for instance Elixir

1.18.3

is used in the ejabberd binary installers and container images.

Acknowledgments

We would like to thank the contributions to the source code, documentation, and translation provided for this release by:

And also to all the people contributing in the ejabberd chatroom, issue tracker...

Improvements in ejabberd Business Edition

Customers of the

ejabberd Business Edition

, in addition to all those improvements and bugfixes, also get the following fixes:

-

Fix

mod_unread

with s2s messages

-

Fix logic detecting duplicate pushes to not trigger pushes on other backends

-

Fix issue with connection to Apple push servers for APNS delivery

-

Fix

server_info

commands when a cluster node is not available

ChangeLog

This is a more detailed list of changes in this ejabberd release:

Commands API

-

ejabberdctl

: New option

CTL_OVER_HTTP

(

#4340

)

-

ejabberd_web_admin

: Support commands with tuple arguments

-

mod_adhoc_api

: New module to execute API Commands using Ad-Hoc Commands (

#4357

)

-

mod_http_api

: Sort list elements in a command result

-

Show warning when registering command with an existing name

-

Fix commands unregistration

-

change_room_option

: Add forgotten support to set

enable_hats

room option

-

change_room_option

: Verify room option value before setting it (

#4337

)

-

create_room_with_opts

: Recommend using

;

and

=

separators

-

list_cluster_detailed

: Fix crash when a node is down

-

mnesia_list_tables

: Allow using this internal command

-

mnesia_table_change_storage

: Allow using this internal command

-

status

: Separate command result with newline

-

update_sql

: Fix updating tables created by ejabberd internally

-

update_sql

: Fix MySQL support

Configuration

-

acl

: Fix bug matching the acl

shared_group: NAME

-

define_keyword

: New option to define keywords (

#4350

)

-

define_macro

: Add option to

globals()

because it's useless inside

host_config

-

ejabberd.yml.example

: Enable

mod_muc_occupantid

by default

-

Add support to use keywords in toplevel, listener and modules

-

Show warning also when deprecated listener option is set as disabled (

#4345

)

Container

-

Bump versions to Erlang/OTP 27.3 and Elixir 1.18.3

-

Add

ERL_FLAGS

to compile elixir on qemu cross-platform

-

Copy files to stable path, add ecs backwards compatibility

-

Fix warning about relative workdir

-

Improve entrypoint script: register account, or set random

-

Link path to Mnesia spool dir for backwards compatibility

-

Place

sockets/

outside

database/

-

Use again direct METHOD, qemu got fixed (

#4280

)

-

ejabberd.yml.example

: Copy main example configuration file

-

ejabberd.yml.example

: Define and use macros in the default configuration file

-

ejabberd.yml.example

: Enable

CTL_OVER_HTTP

by default

-

ejabberd.yml.example

: Listen for webadmin in a port number lower than any other

-

ejabberdapi

: Compile during build

-

CONTAINER.md

: Include documentation for ecs container image

Core and Modules

-

ejabberd_auth

: Add support for

auth_stored_password_types

-

ejabberd_router

: Don't rewrite "self-addressed" privileged IQs as results (

#4348

)

-

misc

: Fix json version of

json_encode_with_kv_list

for nested kv lists (

#4338

)

-

OAuth: Fix crashes when oauth is fed with invalid jid (

#4355

)

-

PubSub: Bubble up db errors in

nodetree_tree_sql:set_node

-

mod_configure

: Add option

access

to let configure the access name

-

mod_mix_pam

: Remove

Channels

roster group of mix channels (

#4297

)

-

mod_muc

: Document MUC room option vcard_xupdate

-

mod_privilege

: Accept non-privileged IQs from privileged components (

#4341

)

-

mod_private

: Improve exception handling

-

mod_private

: Don't warn on conversion errors

-

mod_private

: Handle invalid PEP-native bookmarks

-

mod_private

: Don't crash on invalid bookmarks

-

mod_s2s_bidi

: Stop processing other handlers in s2s_in_handle_info (

#4344

)

-

mod_s2s_bidi

: Fix issue with wrong namespace

Dependencies

-

ex_doc

: Bump to 0.37.2

-

stringprep

: Bump to 1.0.31

-

provider_asn1

: Bump to 0.4.1

-

xmpp

: Bump to bring fix for ssdp hash calculation

-

xmpp

: Bump to get support for webchat_url (

#3041

)

-

xmpp

: Bump to get XEP-0317 Hats namespaces version 0.2.0

-

xmpp

: Bump to bring SSDP to XEP version 0.4

-

yconf

: Bump to support macro inside string

Development and Testing

-

mix.exs

: Keep debug info when building

dev

release

-

mix.exs

: The

ex_doc

dependency is only relevant for the

edoc

Mix environment

-

ext_mod

: add

$libdir/include

to include path

-

ext_mod

: fix greedy include path (

#4359

)

-

gen_mod

: Support registering commands and

hook_subscribe

in

start/2

result

-

c2s_handle_bind

: New event in

ejabberd_c2s

(

#4356

)

-

muc_disco_info_extras

: New event

mod_muc_room

useful for

mod_muc_webchat_url

(

#3041

)

-

VSCode: Fix compiling support

-

Add tests for config features

define_macro

and

define_keyword

-

Allow test to run using

ct_run

-

Fixes to handle re-running test after

update_sql

-

Uninstall

mod_example

when the tests have finished

Documentation

-

Add XEPs that are indirectly supported and required by XEP-0479

-

Document that XEP-0474 0.4.0 was recently upgraded

-

Don't use backtick quotes for ejabberd name

-

Fix values allowed in db_type of mod_auth_fast documentation

-

Reword explanation about ACL names and definitions

-

Update moved or broken URLs in documentation

Installers

-

Bump Erlang/OTP 27.3 and Elixir 1.18.3

-

Bump OpenSSL 3.4.1

-

Bump crosstool-NG 1.27.0

-

Fix building Termcap and Linux-PAM

Matrix Gateway

-

Preserve XMPP message IDs in Matrix rooms

-

Better Matrix room topic and room roles to MUC conversion, support room aliases in invites

-

Add

muc#user

element to presences and an initial empty subject

-

Fix

gen_iq_handler:remove_iq_handler

call

-

Properly handle IQ requests

-

Support Matrix room aliases

-

Fix handling of 3PI events

Unix Domain Socket

-

Add support for socket relative path

-

Use

/tmp

for temporary socket, as path is restricted to 107 chars

-

Handle unix socket when logging remote client

-

When stopping listener, delete Unix Domain Socket file

-

get_auto_url

option: Don't build auto URL if port is unix domain socket (

#4345

)

Full Changelog

https://github.com/processone/ejabberd/compare/24.12...25.03

ejabberd 25.03 download & feedback

As usual, the release is tagged in the Git source code repository on

GitHub

.

The source package and installers are available on the

ejabberd Downloads

page. To check the

*.asc

signature files, see

How to verify ProcessOne downloads integrity

.

For convenience, there are alternative download locations like the

ejabberd DEB/RPM Packages Repository

and the

GitHub Release / Tags

.

The

ecs

container image is available in

docker.io/ejabberd/ecs

and

ghcr.io/processone/ecs

. The alternative

ejabberd

container image is available in

ghcr.io/processone/ejabberd

.

If you think you've found a bug, please search for it or file a bug report on

GitHub Issues

.
